Media monitoring with LLM assistance

| llm | media monitoring | Time to read: 4 minutes (3929 characters)

Language models make it possible to approach media monitoring in a new way. Instead of keyword searches that try to pick out what I might be interested in, the job is handled by a language model that “assesses the news value” of the items in my most important feeds, based on a prompt where I describe both myself and my interests.

(🇸🇪 There is a Swedish translation of this text.)

Media monitoring has been a core part of my job ever since I started as a reporter at Ny Teknik in the fall of 1999. Back then, I subscribed to press releases, browsed international publications covering the internet and technology, and gradually built more efficient workflows for collecting and filtering information.

Eventually, my system became solid enough that the Swedish Internet Foundation asked me to write one of its Internet Guides: “Feeds, Tweets and Status Updates – A Guide to Media Monitoring on the Internet.”

In the introduction to that guide, I referenced journalist and author Nicholas Carr, who argued in a blog post that effective filters can actually intensify information overload. He claimed that good filters don’t just help us find a needle in a haystack — they create entire haystacks of needles. Everything demands our attention, and it becomes hard to know where to begin.

Looking back, I realize that one of the biggest challenges was that the filtered content still wasn’t always relevant.

Keyword monitoring with tools like Google Alerts — say, for “Ericsson” — returned way too many results. The fix was to combine keywords, either ones that should appear alongside “Ericsson” (like WAP or GPRS — those were the days!) or ones I wanted to exclude.

But the problem with keyword-based filtering is that it’s too rigid. It’s hard to capture an interest in a type of news with just a few simple rules.
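
To illustrate how rigid such rules are, here is a minimal Python sketch of a Google Alerts-style filter: a base keyword must appear together with at least one companion term and none of the excluded terms. The function `matches_rule` and the sample headlines are hypothetical; this is not how Google Alerts is actually implemented.

```python
import re


def matches_rule(text: str, required: str, any_of: list[str], exclude: list[str]) -> bool:
    """Hypothetical keyword rule: `required` must appear, at least one `any_of`
    term must appear, and no `exclude` term may appear.
    Matching is case-insensitive and on whole words only."""

    def has(term: str) -> bool:
        # \b anchors the term to word boundaries; re.escape guards special characters.
        return re.search(rf"\b{re.escape(term)}\b", text, re.IGNORECASE) is not None

    return has(required) and any(has(t) for t in any_of) and not any(has(t) for t in exclude)


headlines = [
    "Ericsson unveils new GPRS base station",
    "Ericsson GPRS ad airs during football final",
    "Operators bet on mobile internet as WAP phones reach stores",
]

for h in headlines:
    print(matches_rule(h, "Ericsson", ["WAP", "GPRS"], ["football"]), "|", h)
```

The third headline shows the weakness: a story about the broader trend can be relevant without containing the exact words the rule expects.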

At least, that was true until now. Thanks to language models, it’s finally possible to build solutions that aren’t nearly as static.


Vibe Monitoring Instead of Static Rules

When Andrej Karpathy started talking about vibe coding in February 2025, he gave me a term for something I’d already been experimenting with for a while: using language models for environmental scanning. Or, as I now call it, vibe monitoring.

Vibe coding means describing what you want to build to a language model and letting it write the code. (I’ve used it myself for several small scripts and tools tailored to my own needs. If you want inspiration, this blog post by Harper Reed is a great place to start.)

Vibe monitoring works on the same principle: I trust a language model’s intuition about the kind of news I care about, based on a description of who I am and what I’m interested in.

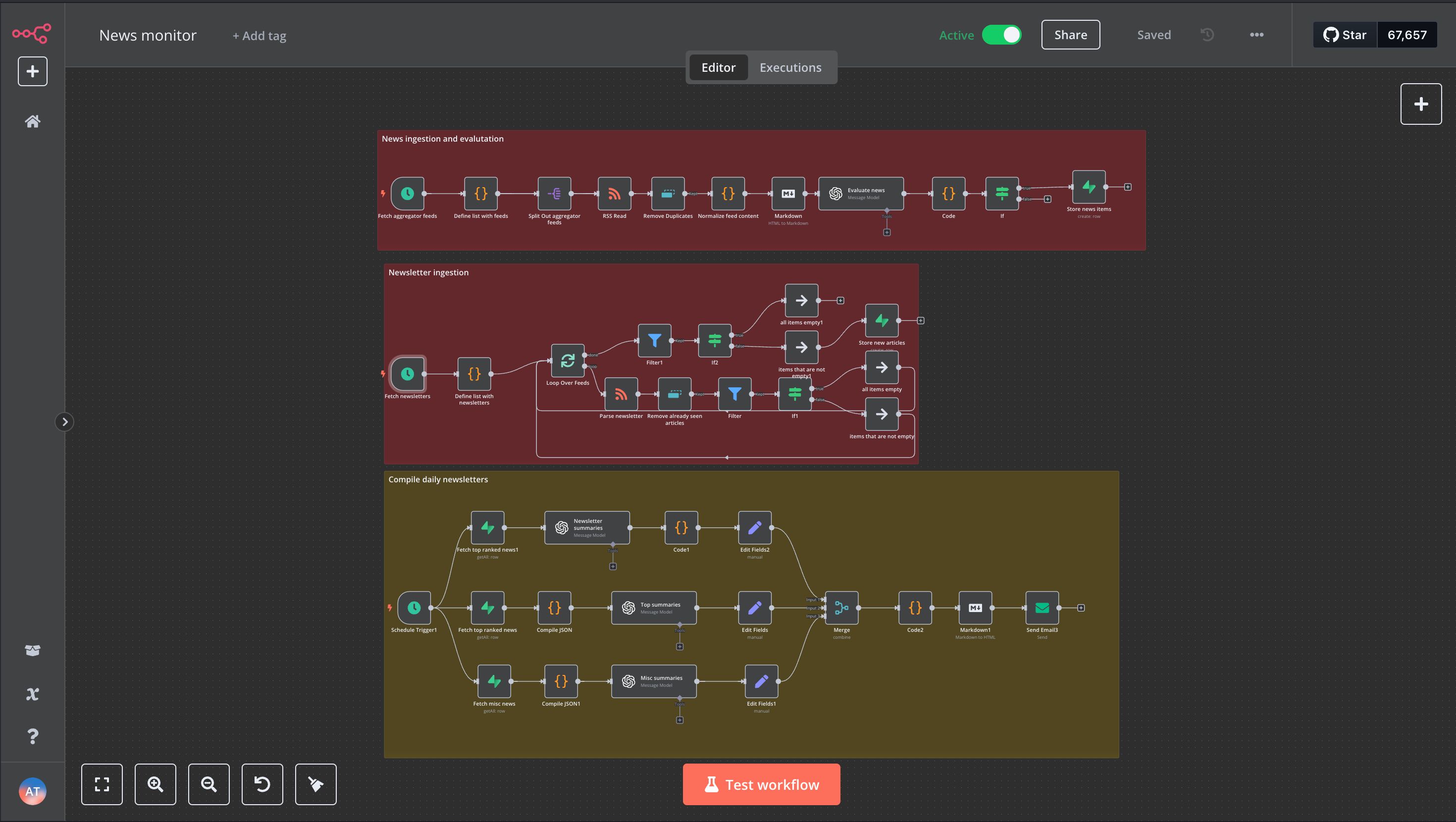

Using the automation platform n8n, I now collect newsletters and news feeds based on the same principles I’ve relied on for more than two decades: following people and publications that cover relevant topics, and picking out the content that matches my specific interests.
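
In n8n this collection step is handled by the platform’s own nodes. As a rough stand-alone illustration, assuming RSS 2.0 feeds (Atom feeds and emailed newsletters would need separate handling), the core of it could look like this using only Python’s standard library. The `FeedItem` type and the `parse_rss` function are hypothetical names for this sketch.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass


@dataclass
class FeedItem:
    source: str   # which feed the item came from
    title: str
    link: str
    summary: str  # the feed's own teaser text, if any


def parse_rss(xml_text: str, source: str) -> list[FeedItem]:
    """Extract <item> entries from an RSS 2.0 document (Atom is not handled)."""
    root = ET.fromstring(xml_text)
    items = []
    for item in root.iter("item"):
        # findtext returns None for missing child elements, so fall back to "".
        items.append(FeedItem(
            source=source,
            title=(item.findtext("title") or "").strip(),
            link=(item.findtext("link") or "").strip(),
            summary=(item.findtext("description") or "").strip(),
        ))
    return items


SAMPLE = """<?xml version="1.0"?>
<rss version="2.0"><channel><title>Example</title>
  <item><title>First story</title><link>https://example.com/1</link>
        <description>Short teaser.</description></item>
  <item><title>Second story</title><link>https://example.com/2</link></item>
</channel></rss>"""

for it in parse_rss(SAMPLE, "example"):
    print(it.title, it.link)
```

In practice the XML would come from fetching each feed URL (for example with `urllib.request.urlopen`) rather than from an inline sample.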

What’s different now is that the filtering isn’t done with hard-coded rules but with two prompts. Both describe who I am, what I do, the topics I care about, and which sources I tend to find useful. One prompt includes instructions to summarize content; the other focuses on deciding whether an article matches my interests. I wrote them the way I’d explain things to a colleague.
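
A minimal sketch of the two-prompt setup, assuming OpenAI-style chat messages: one shared profile, combined with either a summarization task or a filtering task. The profile and task wording below are placeholders, not the actual prompts, and `build_messages` is a hypothetical helper.

```python
# Placeholder profile; a real one would describe the reader in much more detail.
PROFILE = (
    "I am a technology journalist. I follow AI, telecom, internet policy "
    "and digital media, and I value primary sources and original reporting."
)

# Hypothetical task instructions appended to the shared profile.
SUMMARIZE_TASK = (
    "Summarize the article below in 2-3 sentences, highlighting what is new "
    "and why it matters given my interests."
)
FILTER_TASK = (
    "Decide how well the article below matches my interests. Reply with JSON only: "
    '{"score": <integer 0-10>, "topic": "<short topic label>"}.'
)


def build_messages(task: str, title: str, body: str) -> list[dict[str, str]]:
    """Combine the shared profile with one task into chat-style messages."""
    return [
        {"role": "system", "content": f"{PROFILE}\n\n{task}"},
        {"role": "user", "content": f"Title: {title}\n\n{body}"},
    ]


summary_msgs = build_messages(SUMMARIZE_TASK, "Example headline", "Article text...")
filter_msgs = build_messages(FILTER_TASK, "Example headline", "Article text...")
print(filter_msgs[0]["content"])
```

With the official `openai` Python package, either list could be passed as `messages` to `client.chat.completions.create(model="gpt-4o-mini", ...)`; in the setup described here, that call is made from the n8n workflow instead.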

I use the summarization prompt for feeds where I know I want to read most of the content but don’t have time to go through it all. These are primarily newsletters I subscribe to, but also channels for press releases and reports from companies and organizations I care about.

The filtering prompt handles broader feeds like Techmeme, Hacker News, Wired, and The Verge. These sources cover areas I’m generally interested in, but not everything is relevant — so most articles get “discarded.”
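
How items could be routed between the two prompts, and how a filtering reply could be turned into a keep-or-discard decision, is sketched below. It assumes the filtering prompt asks the model to answer in JSON with a 0 to 10 relevance score; the post does not say what format the real prompt uses, and the source names and helper functions are hypothetical.

```python
import json

# Hypothetical routing table: which feeds get summarized and which get filtered.
SUMMARIZE_SOURCES = {"example-newsletter", "example-press-releases"}
FILTER_SOURCES = {"techmeme", "hackernews", "wired", "theverge"}


def route(source: str) -> str:
    """Return which prompt an item from `source` should go through."""
    if source in SUMMARIZE_SOURCES:
        return "summarize"
    if source in FILTER_SOURCES:
        return "filter"
    raise ValueError(f"unknown source: {source}")


def parse_score(reply: str) -> int:
    """Read a relevance score (0-10) from the filtering model's JSON reply.

    Assumes a reply like {"score": 8, "topic": "..."}. Malformed or
    out-of-range replies score 0, so they are discarded instead of
    crashing the run. (json.JSONDecodeError is a subclass of ValueError.)
    """
    try:
        data = json.loads(reply)
        score = int(data["score"])
    except (ValueError, KeyError, TypeError):
        return 0
    return score if 0 <= score <= 10 else 0


print(route("wired"))
print(parse_score('{"score": 8, "topic": "AI policy"}'))
print(parse_score("Sure, this looks relevant!"))
```

Treating malformed replies as a score of 0 is a deliberate choice: since model output is probabilistic, an occasional off-format answer should cost one article, not the whole morning digest.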

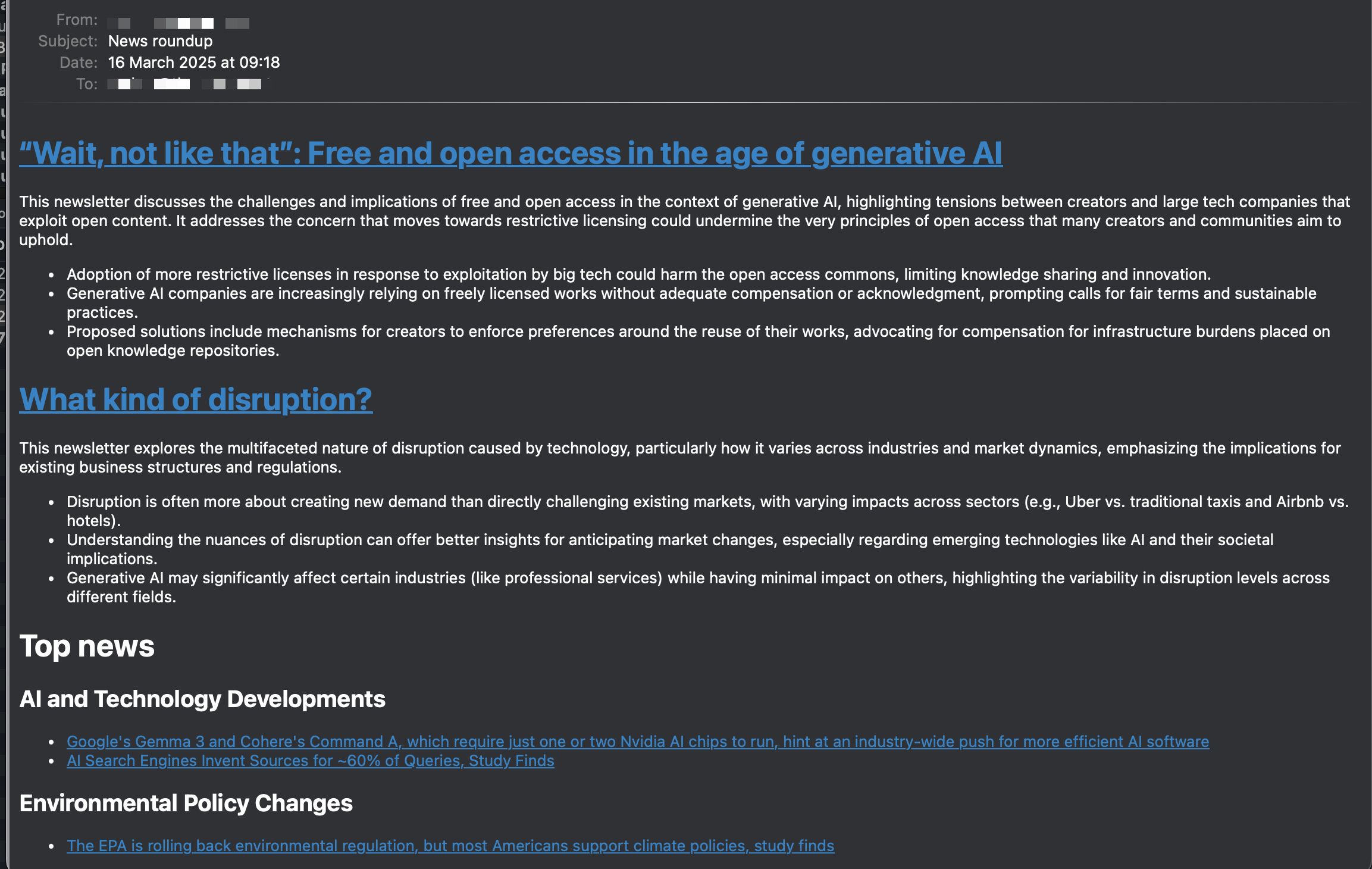

Everything the model outputs gets compiled into an email that lands in my inbox each morning, divided into three parts. The last two also group similar topics under shared headings:

- A summary of new posts from newsletters I follow.

- A section with the news my filtering prompt thinks I’ll be most interested in.

- A final section with links to content that’s less likely to be relevant.
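
The three-part email could be assembled along these lines, assuming each filtered item carries a relevance score and a topic label from the filtering step. The thresholds `TOP` and `MAYBE`, the section names, and the `Scored` type are all hypothetical; the post only says that the last two sections group similar topics under shared headings.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Scored:
    title: str
    link: str
    topic: str
    score: int  # 0-10 relevance from the filtering prompt


# Hypothetical thresholds: >= TOP goes in the main section, >= MAYBE in the link list.
TOP, MAYBE = 7, 4


def group_by_topic(items: list[Scored]) -> dict[str, list[Scored]]:
    """Group items under their topic label, with topics in alphabetical order."""
    groups: dict[str, list[Scored]] = defaultdict(list)
    for it in items:
        groups[it.topic].append(it)
    return dict(sorted(groups.items()))


def build_digest(summaries: list[str], scored: list[Scored]) -> str:
    lines = ["NEWSLETTERS"]
    lines += [f"- {s}" for s in summaries]
    top = [it for it in scored if it.score >= TOP]
    maybe = [it for it in scored if MAYBE <= it.score < TOP]
    for heading, bucket in (("MOST RELEVANT", top), ("MAYBE LATER", maybe)):
        lines += ["", heading]
        for topic, group in group_by_topic(bucket).items():
            lines.append(f"  {topic}")
            lines += [f"  - {it.title} <{it.link}>" for it in group]
    # Items scoring below MAYBE never reach the email.
    return "\n".join(lines)


digest = build_digest(
    ["Example Weekly: three new AI model releases."],
    [Scored("EU AI Act update", "https://example.com/a", "AI policy", 9),
     Scored("New phone review", "https://example.com/b", "Gadgets", 5),
     Scored("Celebrity gossip", "https://example.com/c", "Other", 1)],
)
print(digest)
```

Sending the resulting text is left to whatever email step the workflow uses.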

This gives me a quick overview of what showed up in my feeds over the past day and helps me decide what to read now and what to save for later.

The cost? Practically nothing. I run my own n8n instance on a server I already rent for other purposes (though it could just as easily run locally on my laptop). And my OpenAI usage, with GPT-4o mini summarizing and scoring the news, comes to about 20 to 60 cents per day.
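
The daily cost is easy to estimate from token counts. This sketch assumes GPT-4o mini list prices of $0.15 per million input tokens and $0.60 per million output tokens (verify against OpenAI’s current pricing), and the article volume and per-article token counts are made up for illustration.

```python
# Assumed GPT-4o mini list prices in USD per million tokens; check current pricing.
INPUT_PER_M = 0.15
OUTPUT_PER_M = 0.60


def daily_cost(articles: int, in_tokens_each: int, out_tokens_each: int) -> float:
    """Estimated USD cost of one day's run, given average tokens per article."""
    input_cost = articles * in_tokens_each / 1_000_000 * INPUT_PER_M
    output_cost = articles * out_tokens_each / 1_000_000 * OUTPUT_PER_M
    return input_cost + output_cost


# Hypothetical volume: 500 articles, ~2,000 input tokens (profile + article)
# and ~150 output tokens each.
print(f"${daily_cost(500, 2_000, 150):.3f}")
```

With these hypothetical numbers the estimate comes to roughly 20 cents, and input tokens dominate because the profile is sent again with every single article.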

One final note, which could probably be a post of its own: this way of working is a great example of how the probabilistic nature of language models (they generate text based on statistical likelihood rather than verified facts) isn’t necessarily a drawback. In many cases, the “creative” side of LLMs is exactly what you want. As with any tool, the key is using it for the right job.